Before we get to a few good tips on how to attract Googlebot to your site, let’s start with what a Googlebot is and the difference between crawling and indexing.

A Googlebot is a piece of code, referred to as a bot, that Google sends out to collect information about a document on the web so that it can be added to Google’s searchable index. Google has programmed it to be flexible. Let’s say I have a blog and I post once a month: after a while, the bots learn my pattern and visit my blog only about once a month. If I post content more regularly, the bots will come back and fetch my pages sooner.

Crawling and Indexing

Crawling is the process by which Google discovers new and updated pages to add to its index. Google uses specialized software, called web crawlers, that finds and retrieves web pages automatically. These crawlers are also referred to as bots or spiders. Indexing is what comes next: the bots visit sites, including those registered with Google’s Webmaster Tools, take a snapshot of each page along with some data about it, and store it in a database.

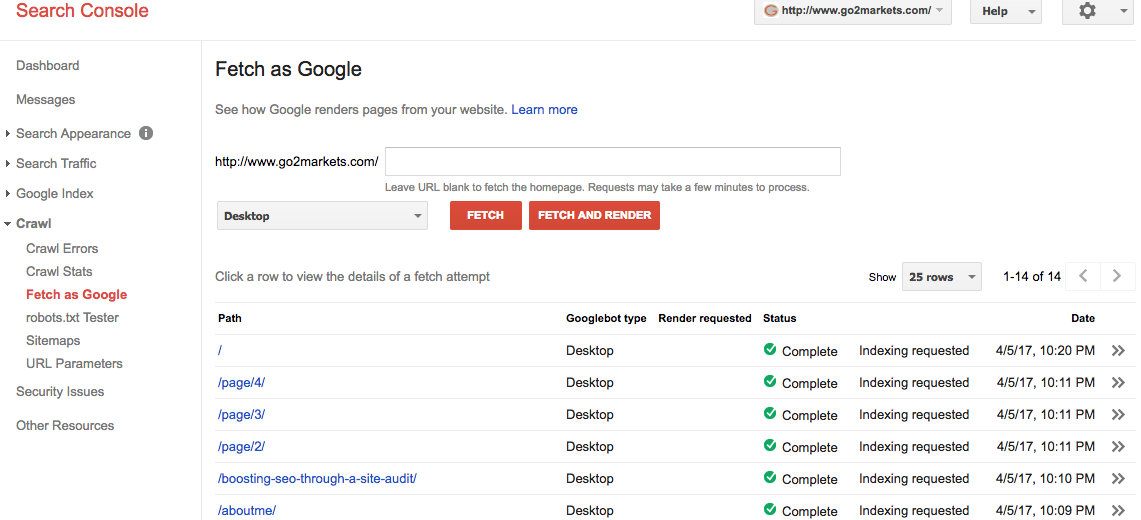
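To make the crawl-then-index idea concrete, here is a toy sketch in Python. It is not how Googlebot is actually implemented. The `PAGES` dictionary, the `LinkExtractor` class, and the `crawl` function are all made up for illustration, and the "web" is just a handful of in-memory pages instead of real HTTP requests.

```python
from collections import deque
from html.parser import HTMLParser

# Hypothetical in-memory "web": URL -> HTML. A real crawler fetches over HTTP.
PAGES = {
    "/": '<a href="/blog">Blog</a> <a href="/about">About</a>',
    "/blog": '<a href="/blog/post-1">Post 1</a> <a href="/">Home</a>',
    "/about": "<p>About us</p>",
    "/blog/post-1": "<p>First post</p>",
    "/orphan": "<p>No other page links here</p>",
}


class LinkExtractor(HTMLParser):
    """Collects the href of every <a> tag found in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    self.links.append(value)


def crawl(start):
    """Follow links breadth-first from `start`; return {url: snapshot}."""
    index = {}
    queue = deque([start])
    while queue:
        url = queue.popleft()
        if url in index or url not in PAGES:
            continue  # already stored, or the page does not exist
        html = PAGES[url]
        index[url] = html  # "indexing": store a snapshot of the page
        extractor = LinkExtractor()
        extractor.feed(html)
        queue.extend(extractor.links)  # "crawling": discover new pages
    return index


if __name__ == "__main__":
    for url in crawl("/"):
        print("indexed:", url)
```

Notice that `/orphan` never gets indexed in this sketch, because no other page links to it. A crawler that only follows links has no way to find it on its own.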

Assuming you’ve just created a new website or written a blog post, there are two ways to get it crawled and indexed: one, wait until Google sends its bots to crawl and index your page, or two, initiate a Google crawl on your own. The Fetch as Google tool enables you to test how Google crawls or renders a URL on your site (see image below). You can use Fetch as Google to see whether Googlebot can access a page on your site, how it renders the page, and whether any page resources (such as images or scripts) are blocked to Googlebot.

This tool simulates a crawl and render the same way Google’s normal crawling and rendering process performs them, which makes it useful for debugging crawl issues on your site.
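If you want a rough local check before reaching for the tool, one common reason a page or resource ends up blocked to Googlebot is a rule in the site’s robots.txt file. That is an assumption on my part, since a block can have other causes too. The sketch below uses Python’s standard `urllib.robotparser` module. The robots.txt content, the example.com URLs, and the `blocked_for_googlebot` helper are hypothetical, and this only checks robots.txt rules; it does not reproduce what Fetch as Google does.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private/
Disallow: /assets/scripts/

User-agent: *
Disallow:
"""


def blocked_for_googlebot(robots_txt, urls):
    """Return {url: True if the robots.txt rules block Googlebot from it}."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())  # parse from text, no network call
    return {url: not parser.can_fetch("Googlebot", url) for url in urls}


if __name__ == "__main__":
    pages = [
        "https://example.com/blog/post.html",
        "https://example.com/private/report.html",
        "https://example.com/assets/scripts/app.js",
    ]
    for url, blocked in blocked_for_googlebot(ROBOTS_TXT, pages).items():
        print("BLOCKED" if blocked else "ok     ", url)
```

With these made-up rules, the blog post is reachable while the private page and the script are reported as blocked, which is the kind of issue the paragraph above describes for images and scripts.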

Submitting pages to the index is highly recommended, as it prompts Googlebot to crawl and index your site’s pages promptly instead of waiting for its next visit. This can help your site and its relevant pages show up sooner on the search engine results pages (SERPs).